|

I am a postdoc at Peking University. I received my PhD from the Australian National University and my Master's degree from the University of Helsinki, both in computer science. My research interest is neural networks, including their theory, architectures, and learning algorithms. Email / Google Scholar / Twitter / GitHub |

|

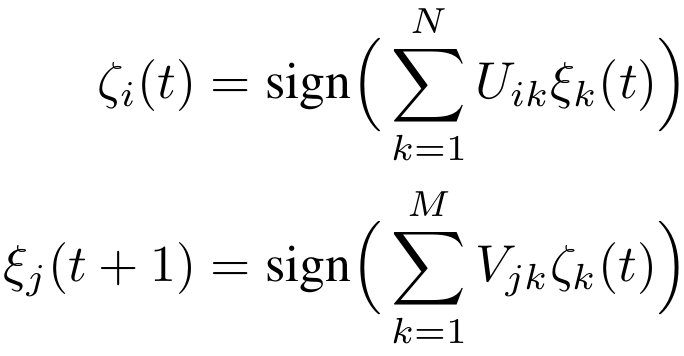

Yao Lu, Si Wu Neural Networks, 2024 [paper] [code]  We identified a problem with recurrent networks consisting only of visible binary neurons when generating sequences, and solved it by adding hidden neurons. We proposed a three-factor learning algorithm with theoretical guarantees that learns sequences as attractors in networks with hidden neurons.

|

|

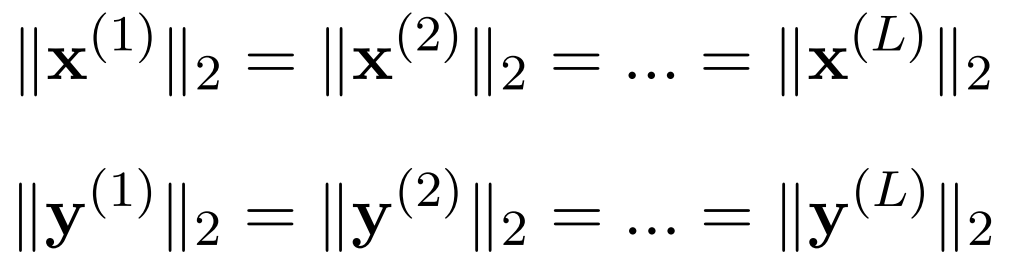

Yao Lu, Stephen Gould, Thalaiyasingam Ajanthan Neural Networks, 2023 [paper] [code] [slides]  We theoretically solve the vanishing/exploding gradients problem in feedforward neural networks. Key idea: constraining signal propagation in both directions via a new class of activation functions and orthogonal weight matrices based on high-dimensional probability theory.

|

|

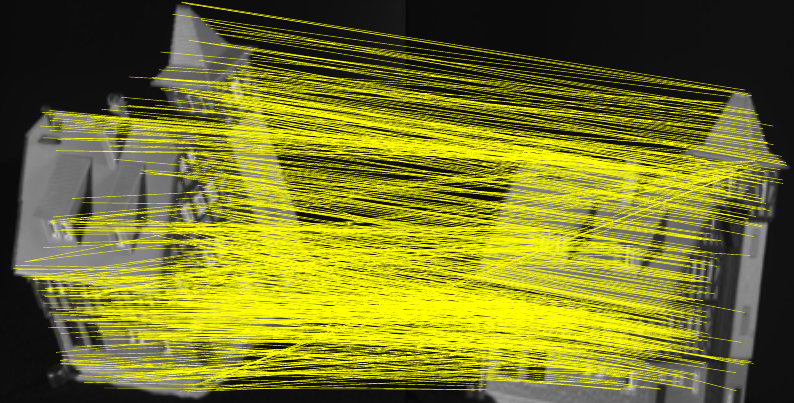

Shihao Jiang, Dylan Campbell, Yao Lu, Hongdong Li, Richard Hartley IEEE International Conference on Computer Vision (ICCV), 2021 [paper] [code]  We obtained state-of-the-art optical flow estimation by integrating global motion features to handle occlusion.

|

|

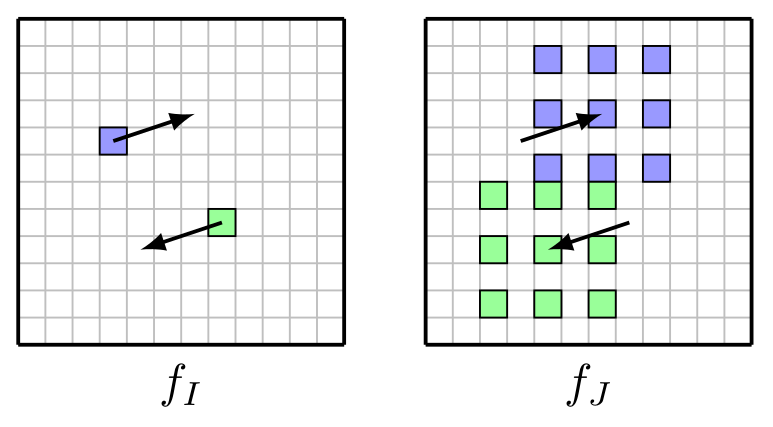

Shihao Jiang, Yao Lu, Hongdong Li, Richard Hartley IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021 [paper] [code]  We found that high-performance optical flow estimation can be achieved with a few candidate correspondences.

|

|

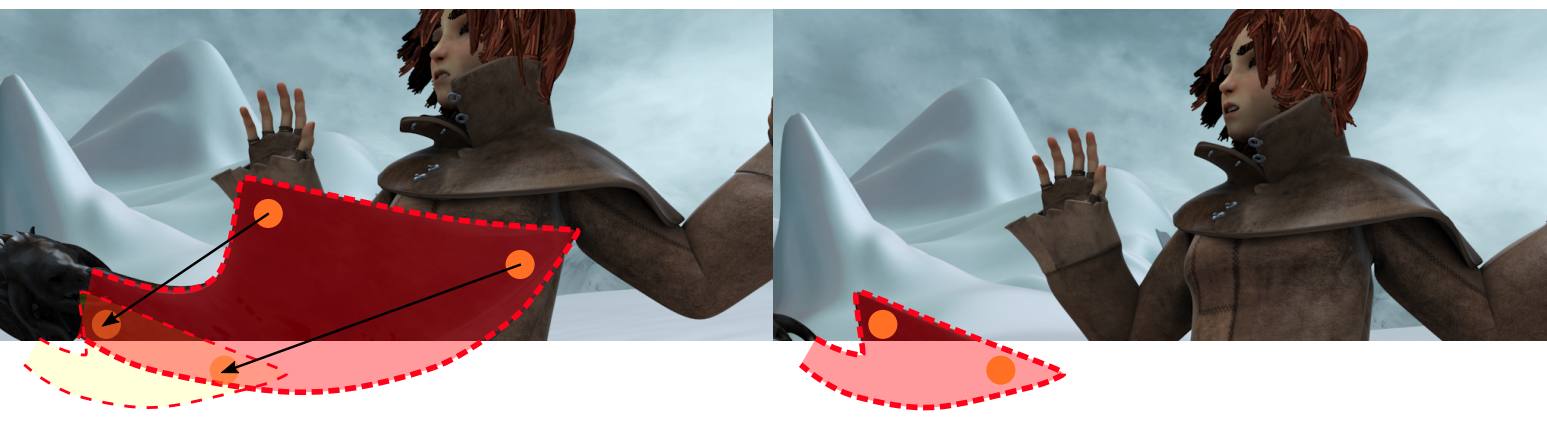

Yao Lu, Jack Valmadre, Heng Wang, Juho Kannala, Mehrtash Harandi, Philip Torr IEEE Winter Conference on Applications of Computer Vision (WACV), 2020 [paper] [code] [slides]  We pointed out the problem of image warping in estimating optical flow and proposed the deformable cost volume to solve it.

|

|

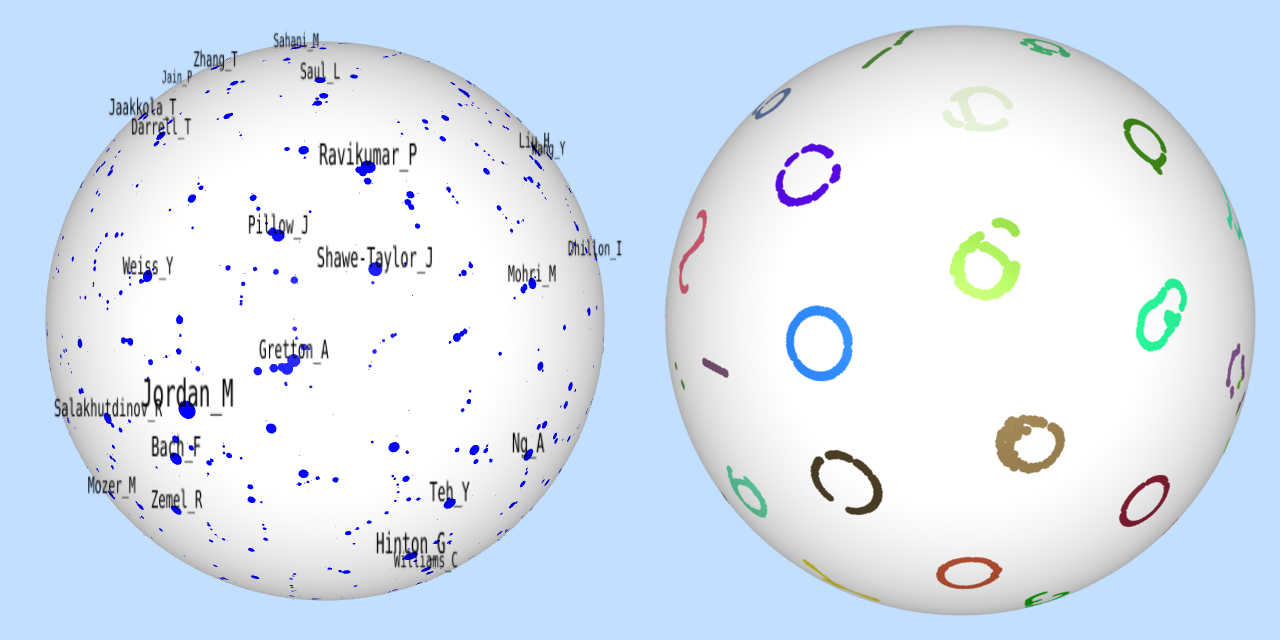

Yao Lu, Jukka Corander, Zhirong Yang Pattern Recognition Letters, 2019 [paper] [code] [demo]  We proposed a new method for data visualization on spheres!

|

|

Yao Lu, Zhirong Yang, Juho Kannala, Samuel Kaski International Joint Conference on Neural Networks (IJCNN), 2019 [paper] [code] [slides]  We proposed a new neural network module, Contrast Association Units, to model the relations between two sets of input variables.

|

|

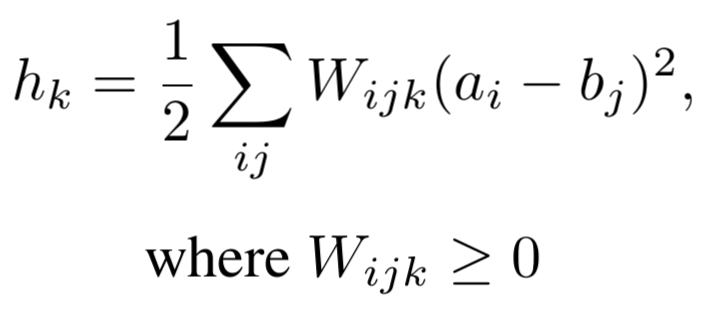

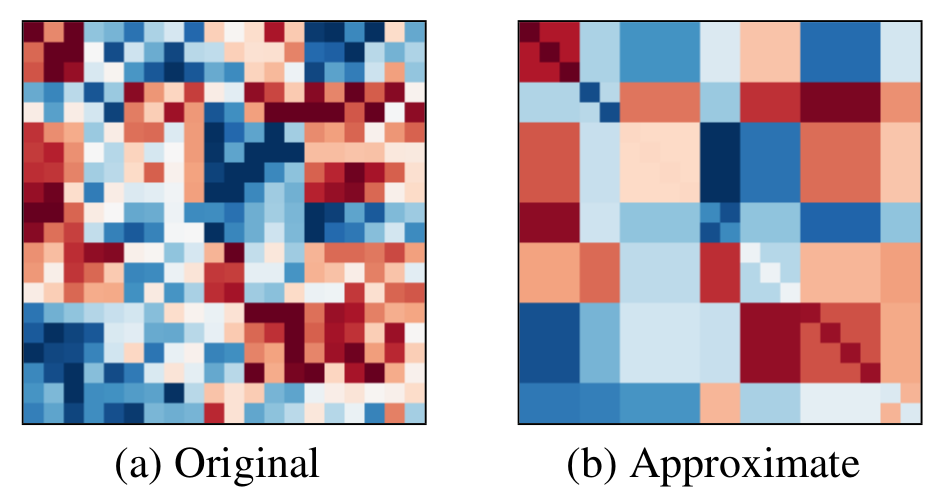

Yao Lu, Mehrtash Harandi, Richard Hartley, Razvan Pascanu ICML workshop on Modern Trends in Nonconvex Optimization for Machine Learning, 2018 [paper] [code] [slides]  We proposed a new matrix approximation method that allows efficient matrix inversion. We then applied the method to second-order optimization algorithms for training neural networks.

|

|

Jian Liu, Mika Juuti, Yao Lu, N. Asokan ACM Conference on Computer and Communications Security (CCS), 2017 [paper] [code] We proposed a new method for privacy-preserving predictions with trained neural networks.

|

|

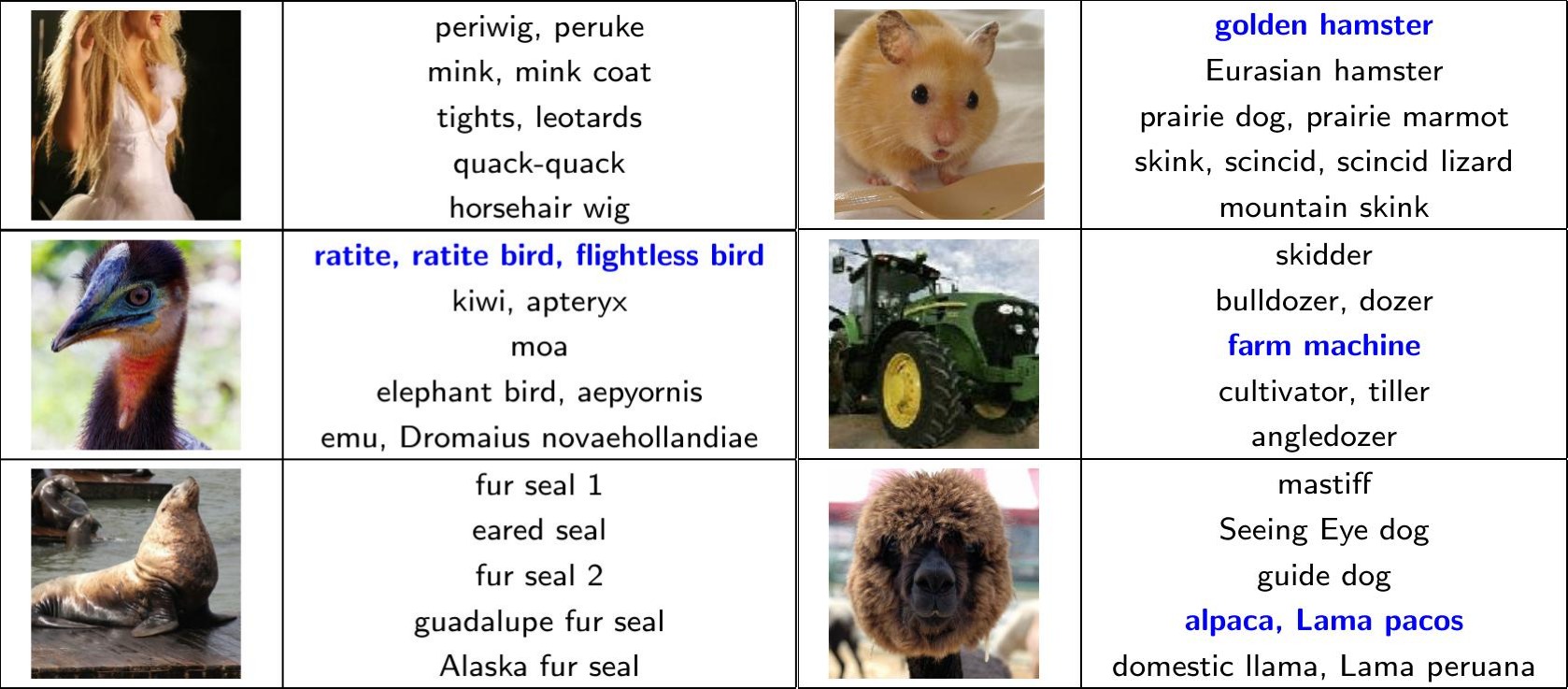

Yao Lu International Joint Conference on Artificial Intelligence (IJCAI), 2016 [paper] [code]  We found that visual attributes of object classes can be learned in an unsupervised manner by applying Independent Component Analysis to the softmax outputs of a trained ConvNet. We showed that such attributes can be useful for object recognition by performing zero-shot learning experiments on the ImageNet dataset of over 20,000 object classes.

|

|

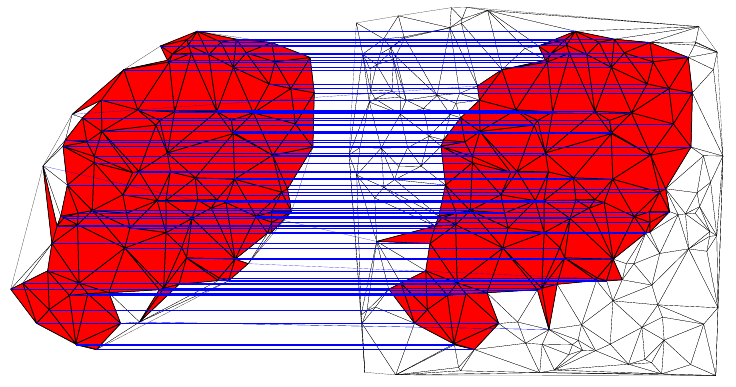

Yao Lu, Kaizhu Huang, Cheng-Lin Liu Pattern Recognition, 2016 [paper] [code]  We designed a fast graph matching algorithm with time complexity O(n^3) per iteration, where n is the size of a graph. We proved its convergence rate. It matches two graphs of 1,000 nodes within 10 seconds on a PC.

|

|

Yao Lu, Horst Bunke, Cheng-Lin Liu Graph-Based Representations in Pattern Recognition (GbR), 2013 [paper] [code]  We designed a fast Maximum Common Subgraph (MCS) algorithm for Planar Triangulation Graphs. Its time complexity is O(mnk), where n is the size of one graph, m is the size of the other graph and k is the size of their MCS.

|

|

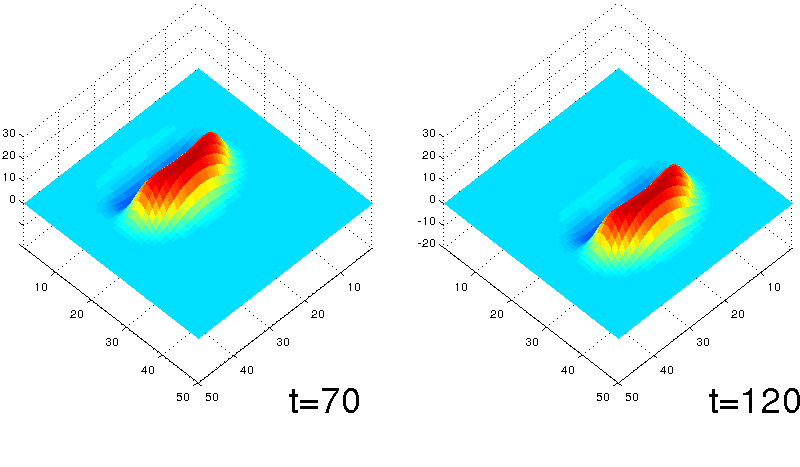

Yao Lu, Yuzuru Sato, Shun-ichi Amari Neural Computation, 2011 [paper] [code]  We found a unique traveling bump solution, previously not known to exist, in a set of two-dimensional neural network equations.

|

Yao Lu [code] |

|

Yao Lu [code] |

|

Han Xiao, Yao Lu [code] |

|

Site template from Jon Barron |